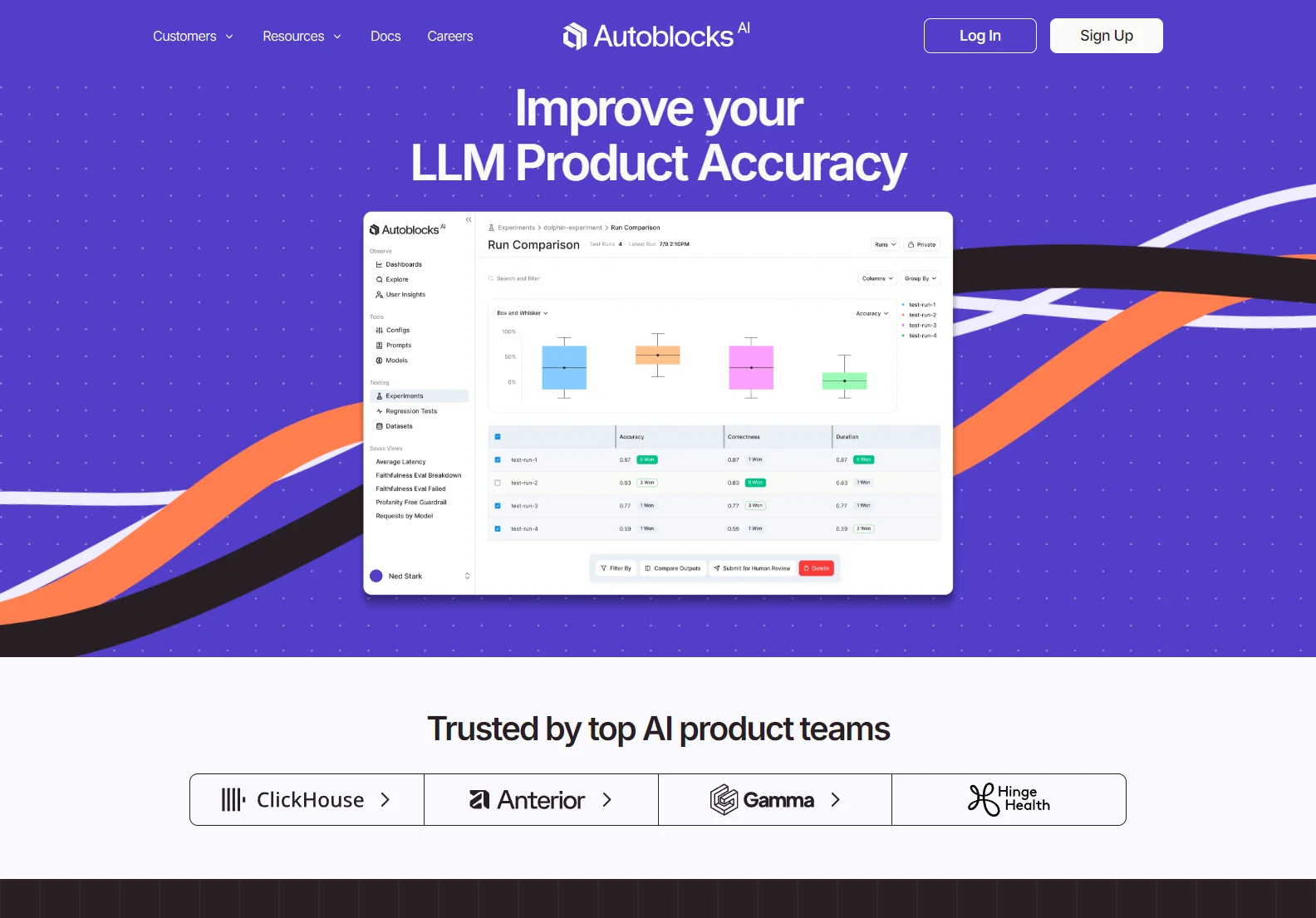

Autoblocks: Revolutionizing GenAI Product Development

Autoblocks is a collaborative testing and evaluation platform designed to improve the accuracy of large language model (LLM) products. It gives entire teams a shared, expert-driven process for testing and evaluating LLM output. Its distinguishing strength is a feedback loop: input from users and experts is used to refine the tests, and better tests in turn lead to better products.

Key Features and Benefits

Autoblocks offers a comprehensive suite of features to streamline the entire GenAI product development lifecycle:

- Enhanced Testing: Align your tests more closely with real-world scenarios so that results are more accurate and reliable.

- High-Quality Datasets: Curate and manage high-quality test datasets to ensure the robustness of your evaluations.

- Real-time Monitoring: Monitor your production environment with integrated observability tools so that issues can be identified proactively.

- Valuable Test Case Identification: Leverage user feedback and online evaluations to pinpoint critical test cases that expose weaknesses in your LLM.

- Collaborative Experimentation: Use the flexible SDKs to bring Autoblocks into your existing workflow, so that teams can experiment together and improve iteratively.

- Metric Alignment: Let experts provide detailed feedback, and use that feedback to align automated evaluation metrics with human preferences.

- Seamless Integration: The platform's flexible SDKs are designed to integrate with any codebase and framework.

- Comprehensive Management: Manage prompts, configurations, and custom models efficiently within the platform.

- Human-in-the-Loop Feedback: Empower subject-matter experts and non-technical stakeholders to contribute valuable insights, enhancing accuracy and refining the LLM's performance.
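
The idea behind metric alignment can be illustrated with a small, generic sketch. It does not use the Autoblocks SDK; the `LabeledOutput` type and both helper functions are hypothetical names chosen for illustration. It assumes each LLM output has a pass/fail verdict from an automated metric and a pass/fail verdict from an expert, and it measures how often the two agree.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LabeledOutput:
    """One LLM output with an automated verdict and an expert verdict (hypothetical type)."""
    case_id: str
    automated_pass: bool
    expert_pass: bool


def agreement_rate(items: list[LabeledOutput]) -> float:
    """Fraction of items where the automated metric matches the expert."""
    if not items:
        raise ValueError("need at least one labeled output")
    matches = sum(1 for it in items if it.automated_pass == it.expert_pass)
    return matches / len(items)


def disagreements(items: list[LabeledOutput]) -> list[str]:
    """IDs of the cases where the automated metric and the expert disagree."""
    return [it.case_id for it in items if it.automated_pass != it.expert_pass]


# Illustrative data only; not taken from any real evaluation run.
sample = [
    LabeledOutput("case-1", automated_pass=True, expert_pass=True),
    LabeledOutput("case-2", automated_pass=True, expert_pass=False),
    LabeledOutput("case-3", automated_pass=False, expert_pass=False),
    LabeledOutput("case-4", automated_pass=True, expert_pass=True),
]

print(agreement_rate(sample))  # 0.75
print(disagreements(sample))   # ['case-2']
```

In a workflow like the one described above, a low agreement rate would suggest that the automated metric needs revising, and the disagreeing cases are natural candidates for new test cases.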

Core Modules

Autoblocks is structured around several core modules to address the diverse needs of GenAI product development:

- Testing & Evaluation: Speed up your local testing and experimentation process.

- Monitoring & Guardrails: Configure online evaluations and guardrails to ensure a safe and trustworthy user experience.

- Debugging: Quickly identify and resolve issues with powerful debugging tools, facilitating rapid prototyping of solutions.

- AI Product Analytics: Connect AI product state to user outcomes, proactively identifying areas for improvement.

- Prompt Management: Collaborate on prompts efficiently while maintaining code integrity.

- RAG & Context Engineering: Optimize your context pipeline for accurate and relevant outputs.

- Security & Scalability: Built with robust security and scalability in mind to meet the most demanding requirements.
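
To make the guardrail concept from the Monitoring & Guardrails module concrete, here is a minimal, framework-independent sketch. It is not the Autoblocks API; `apply_guardrails`, `no_email_addresses`, and `max_length` are hypothetical names, and the email pattern is deliberately simplistic. It assumes a guardrail is a function that inspects an LLM output and returns a reason string when the output should be blocked.

```python
import re
from typing import Callable, Optional

# A check returns None when the output is acceptable, otherwise a reason string.
Check = Callable[[str], Optional[str]]

# Simplified pattern for illustration; real email detection is more involved.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def no_email_addresses(output: str) -> Optional[str]:
    """Flag outputs that appear to contain an email address."""
    return "contains an email address" if EMAIL_PATTERN.search(output) else None


def max_length(limit: int) -> Check:
    """Build a check that flags outputs longer than `limit` characters."""
    def check(output: str) -> Optional[str]:
        return f"longer than {limit} characters" if len(output) > limit else None
    return check


def apply_guardrails(
    output: str, checks: list[Check], fallback: str
) -> tuple[str, list[str]]:
    """Run every check; return the fallback text if any check fails."""
    violations = [reason for c in checks if (reason := c(output)) is not None]
    return (fallback if violations else output, violations)


checks = [no_email_addresses, max_length(200)]
text, problems = apply_guardrails(
    "Contact me at jane@example.com", checks, "Sorry, I can't share that."
)
print(text)      # Sorry, I can't share that.
print(problems)  # ['contains an email address']
```

Returning the list of violations alongside the final text means the same function can feed both the user-facing response and a monitoring log.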

Autoblocks vs. Competitors

While several platforms offer parts of what Autoblocks provides, its combination of collaborative testing, continuous improvement through feedback, and flexible integration sets it apart. Unlike many competitors that focus on a single aspect of LLM development, Autoblocks aims to provide a holistic solution that covers the process from testing through deployment and beyond. This integrated approach is intended to reduce development time and improve product quality across the GenAI product development lifecycle.

Conclusion

Autoblocks represents a significant advancement in GenAI product development. By fostering collaboration, leveraging user feedback, and providing a comprehensive suite of tools, it helps teams build more accurate, reliable, and user-friendly LLM-powered products. Its focus on continuous improvement and integration with existing workflows makes it a valuable asset for organizations working in the rapidly evolving field of generative AI.