The Local AI Playground: Run AI Models Offline

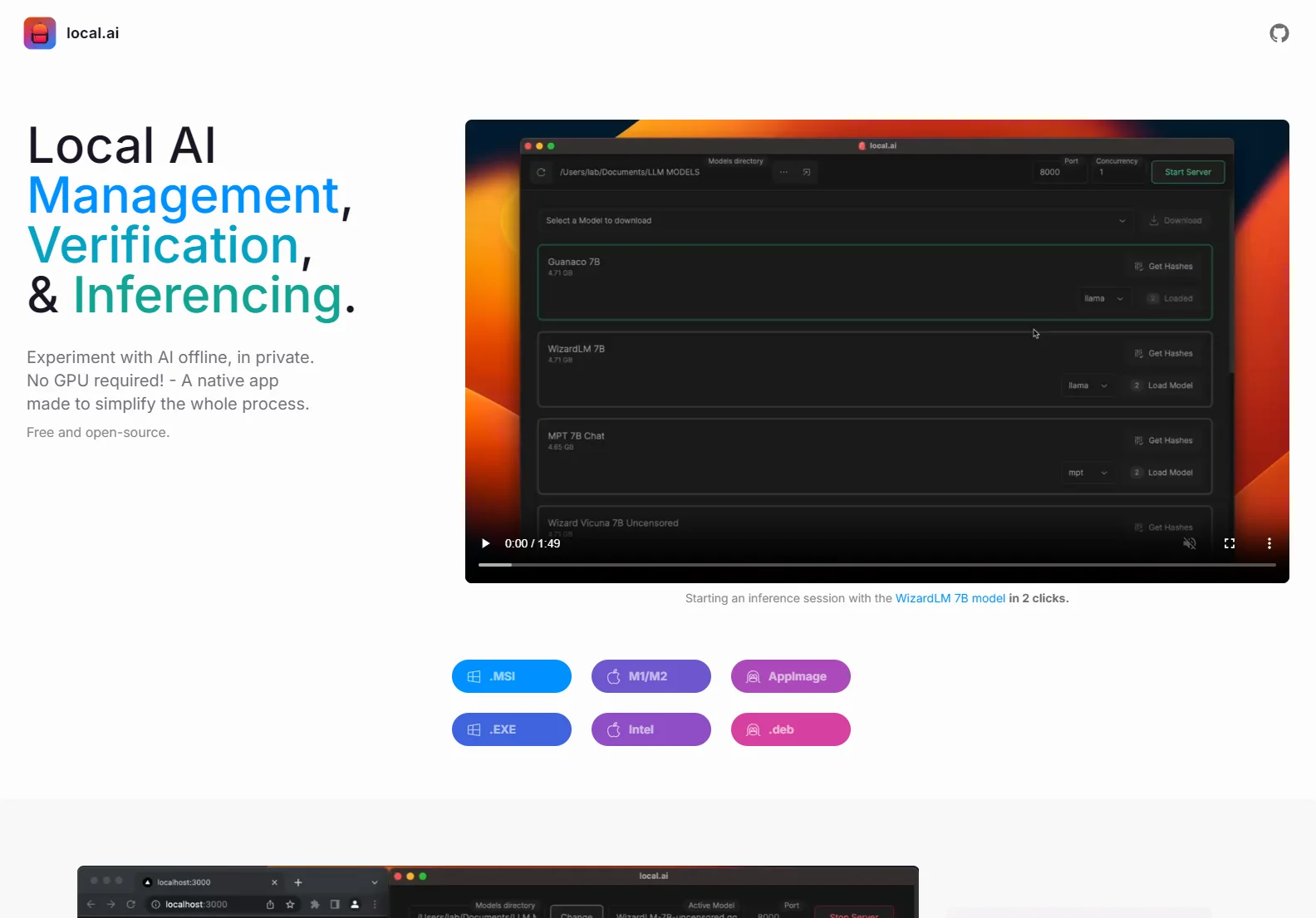

Local.ai is a native application for managing, verifying, and running AI models offline. It lets you experiment with AI models without a GPU, which makes it accessible to a wider range of users. The app is free and open-source.

Key Features

Model Management: Local.ai gives you one place to manage your AI models. You can add, organize, and track models stored in any directory.

- Resumable Downloads: An interrupted model download can be resumed instead of restarted from scratch.

- Concurrent Downloads: Download multiple models simultaneously.

- Usage-Based Sorting: Models are sorted based on usage frequency.

- Directory Agnostic: Works with models stored in any directory.
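To illustrate the idea behind resumable downloads, here is a minimal sketch using an HTTP `Range` request. It is not Local.ai's actual implementation; the helper names `range_header` and `resume_download` are hypothetical, and the sketch assumes the server hosting the model supports range requests.

```python
import os
import urllib.request


def range_header(partial_path: str) -> dict:
    """Build the Range header that asks only for the bytes not yet on disk."""
    have = os.path.getsize(partial_path) if os.path.exists(partial_path) else 0
    return {"Range": f"bytes={have}-"} if have else {}


def resume_download(url: str, dest: str, chunk_size: int = 1 << 20) -> None:
    """Fetch the remaining bytes of `url` into `dest` (hypothetical helper)."""
    request = urllib.request.Request(url, headers=range_header(dest))
    with urllib.request.urlopen(request) as response:
        # 206 means the server honored the range, so append to the partial file.
        # Any other success status means it sent the whole file, so overwrite.
        mode = "ab" if response.status == 206 else "wb"
        with open(dest, mode) as out:
            while chunk := response.read(chunk_size):
                out.write(chunk)
```

If the partial file is already complete, a server will typically answer the range request with an error status, which `urlopen` raises as an exception; a fuller version would catch that case and treat the download as finished.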

Digest Verification: Ensure the integrity of your downloaded models using BLAKE3 and SHA256 digest verification. This helps prevent using corrupted or tampered-with models.
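As a rough sketch of what digest verification involves, the snippet below computes a file's SHA256 digest with Python's standard library and compares it to a published value. The function names are illustrative assumptions, not part of Local.ai. BLAKE3 is not in the standard library, so only SHA256 is shown.

```python
import hashlib
from pathlib import Path


def sha256_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA256 digest of a file, reading it in chunks."""
    hasher = hashlib.sha256()
    with path.open("rb") as f:
        # Chunked reads keep multi-gigabyte model files out of memory.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            hasher.update(chunk)
    return hasher.hexdigest()


def verify_model(path: Path, expected_hex: str) -> bool:
    """Compare the computed digest with the published one, ignoring case."""
    return sha256_digest(path) == expected_hex.strip().lower()
```

A call such as `verify_model(Path("model.bin"), published_digest)` returns `False` if even a single byte of the file differs from the one the digest was published for.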

Inferencing: Run inference sessions directly on the CPU; the app adapts to the number of available threads. It supports GGML quantization levels q4, q5_1, q8, and f16.

- Inferencing Server: Start a local streaming server for AI inferencing with a simple, user-friendly interface.

- Available installers: Windows (.MSI, .EXE), macOS (M1/M2, Intel), Linux (AppImage, .deb)
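To give a feel for why the quantization levels mentioned above matter on a CPU-only machine, the following back-of-the-envelope sketch estimates the memory taken by a model's weights. The bit widths are nominal assumptions (written with GGML-style names); real GGML files also store per-block scale factors, so actual sizes are somewhat larger.

```python
# Nominal bits per weight for each level (an approximation; real files
# carry extra per-block metadata and will be somewhat larger).
NOMINAL_BITS = {"q4": 4, "q5_1": 5, "q8": 8, "f16": 16}


def approx_weight_bytes(n_params: int, level: str) -> int:
    """Estimate bytes needed to hold `n_params` weights at a given level."""
    return n_params * NOMINAL_BITS[level] // 8


if __name__ == "__main__":
    n_params = 7_000_000_000  # an illustrative 7-billion-parameter model
    for level in NOMINAL_BITS:
        gb = approx_weight_bytes(n_params, level) / 1e9
        print(f"{level}: about {gb:.1f} GB")
```

Under these assumptions, a 7-billion-parameter model needs about 14 GB for its weights at f16 but only about 3.5 GB at q4, which is the difference between fitting in a typical laptop's RAM or not.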

Upcoming Features

- GPU Inferencing: Support for GPU acceleration is planned for future releases.

- Parallel Sessions: Run multiple inference sessions concurrently.

- Nested Directory Support: Organize models within nested directories.

- Custom Sorting and Searching: Advanced options for sorting and searching models.

- Model Explorer: A visual explorer to browse and manage models.

- Model Search and Recommendation: Intelligent search and recommendations for models.

- Server Management: Enhanced management tools for the inferencing server.

Comparison to Other AI Tools

Unlike many cloud-based AI solutions, Local.ai emphasizes privacy and offline functionality. Because models run on your own machine, your data does not need to leave it, and inference does not depend on an internet connection. The trade-off is that performance is bounded by your local hardware, which for now means the CPU.

Conclusion

Local.ai is a free, open-source tool for managing, verifying, and running AI models offline. Its simple interface and CPU-only inferencing make it a practical starting point for developers, researchers, and anyone who wants to experiment with AI models locally.