stabilityai/StableBeluga2: A Comprehensive Guide

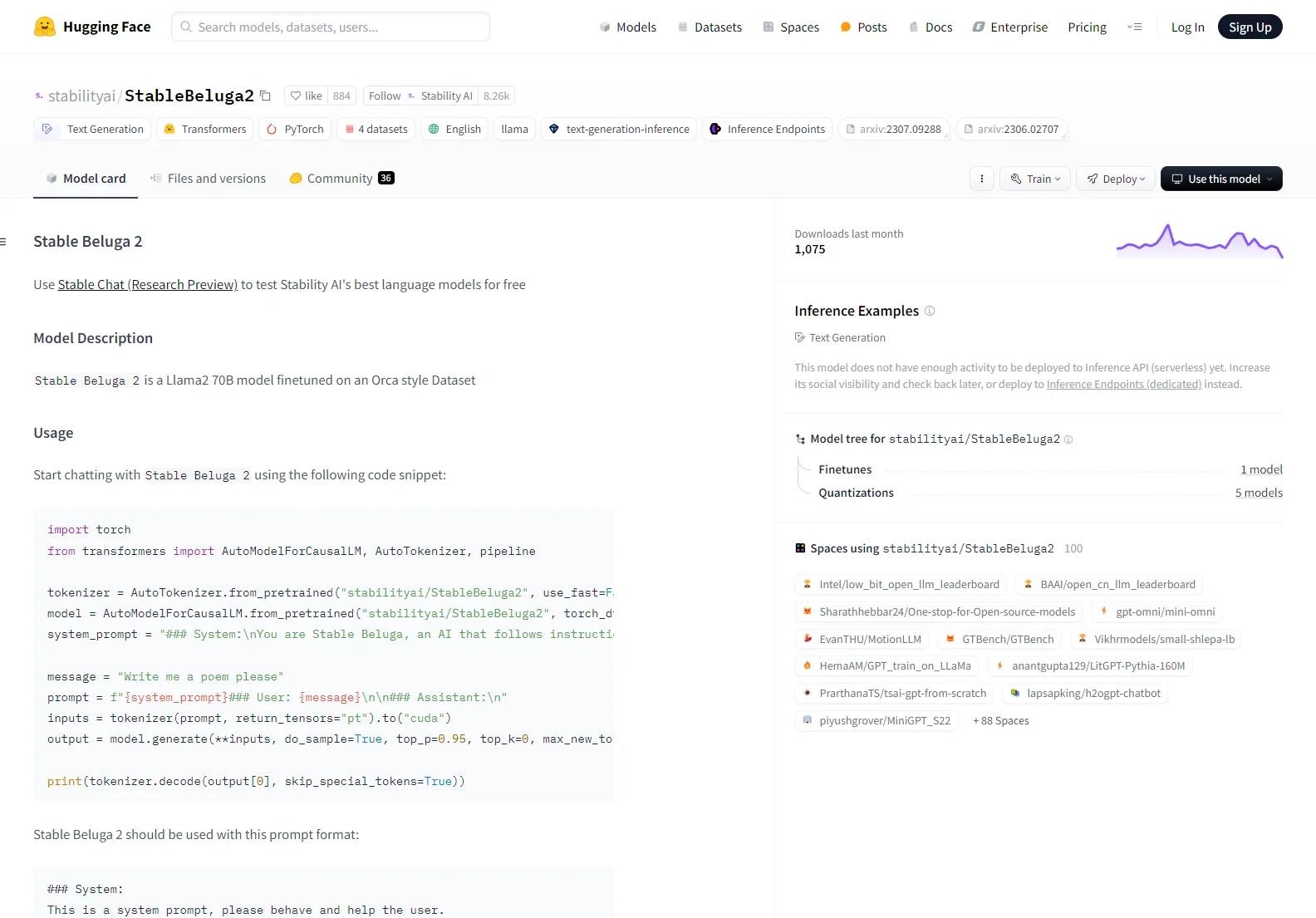

Stable Beluga 2 is a large language model (LLM) developed by Stability AI. It is a Llama 2 70B model fine-tuned on an Orca-style dataset, and it is designed to generate fluent text, follow instructions, and hold natural-sounding conversations. This guide covers its capabilities, limitations, and applications.

Key Features

- High-Quality Text Generation: Stable Beluga 2 produces coherent, fluent, and contextually relevant text, making it suitable for various creative writing tasks, such as poem generation, story writing, and script creation.

- Instruction Following: The model is tuned to follow instructions, so the generated text tends to align closely with what the user asked for.

- Natural Conversation: Stable Beluga 2 can hold natural-sounding conversations, which makes it a good fit for chatbot applications and interactive AI experiences.

- Llama 2 Foundation: Stable Beluga 2 is built on Llama 2 70B, so it inherits that base model's architecture and pretrained capabilities.

- Orca-Style Dataset Fine-tuning: Fine-tuning on an Orca-style dataset improves the model's ability to understand and respond to complex instructions and prompts.

Use Cases

Stable Beluga 2's versatility makes it applicable across various domains:

- Creative Writing: Generate poems, stories, scripts, and other creative text formats.

- Chatbots: Develop engaging and informative chatbots for customer service, education, or entertainment.

- Content Creation: Assist in generating marketing copy, articles, and other forms of written content.

- Research and Development: Explore new applications of LLMs and contribute to the advancement of AI technology.
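For the chatbot and instruction-following use cases above, the prompt has to be laid out the way the model was fine-tuned to expect. The sketch below assumes the `### System:` / `### User:` / `### Assistant:` layout described on the model card; check it against the current card before relying on it. The helper name `build_prompt` and the example strings are hypothetical and used only for illustration.

```python
def build_prompt(system: str, user: str) -> str:
    """Assemble a single-turn prompt in the System/User/Assistant layout.

    Assumption: the layout follows the format described on the model card.
    """
    return (
        f"### System:\n{system.strip()}\n\n"
        f"### User:\n{user.strip()}\n\n"
        "### Assistant:\n"  # the model's reply is generated after this header
    )


# Hypothetical example inputs.
prompt = build_prompt(
    "You are a helpful assistant that answers concisely.",
    "Write a two-line poem about the sea.",
)
print(prompt)
```

The resulting string would then be tokenized and passed to whatever inference stack hosts the model. Keeping the layout in one small function makes it easy to adjust if the expected format differs.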

Limitations and Ethical Considerations

While Stable Beluga 2 offers significant capabilities, it's crucial to acknowledge its limitations:

- Potential for Bias: Like other LLMs, Stable Beluga 2 may exhibit biases present in its training data. Careful monitoring and mitigation strategies are necessary.

- Unpredictable Outputs: The model's responses can be unpredictable, potentially generating inaccurate, biased, or objectionable content. Thorough testing and safety measures are essential before deployment.

- Limited Contextual Understanding: The model may still struggle with highly nuanced or complex contexts.

Comparisons with Other LLMs

Stable Beluga 2 differs from other Llama 2-based models mainly in its fine-tuning on an Orca-style dataset, which targets complex instruction following. That fine-tuning is intended to improve accuracy and adherence to user instructions, but any direct comparison requires benchmarking on specific tasks and metrics.
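As a rough illustration of what benchmarking on a specific task and metric can look like, the sketch below scores two models with exact-match accuracy on a tiny question set. Everything here is an assumption for illustration: `model_a` and `model_b` are stub functions standing in for real model calls, the examples are made up, and exact match is only one of many possible metrics.

```python
from typing import Callable, Iterable, Tuple


def exact_match_accuracy(
    generate: Callable[[str], str],
    examples: Iterable[Tuple[str, str]],
) -> float:
    """Return the fraction of examples whose output equals the reference.

    Comparison ignores case and surrounding whitespace.
    """
    examples = list(examples)
    if not examples:
        raise ValueError("need at least one example")
    hits = sum(
        1
        for prompt, reference in examples
        if generate(prompt).strip().lower() == reference.strip().lower()
    )
    return hits / len(examples)


# Hypothetical stand-ins for real model calls.
def model_a(prompt: str) -> str:
    return {"2+2=": "4", "Capital of France?": "Paris"}.get(prompt, "")


def model_b(prompt: str) -> str:
    return {"2+2=": "4"}.get(prompt, "")


# Made-up evaluation set of (prompt, reference answer) pairs.
examples = [("2+2=", "4"), ("Capital of France?", "paris")]

scores = {
    name: exact_match_accuracy(fn, examples)
    for name, fn in [("model_a", model_a), ("model_b", model_b)]
}
print(scores)
```

In a real comparison, the stubs would be replaced with calls to each model, and the example set and metric would be chosen to match the task being evaluated.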

Conclusion

Stable Beluga 2 combines the Llama 2 70B base model with Orca-style instruction fine-tuning. Its ability to generate fluent text, follow instructions, and hold natural conversations makes it a useful tool for a range of applications. Developers should still weigh its limitations and implement appropriate safety measures before deployment.