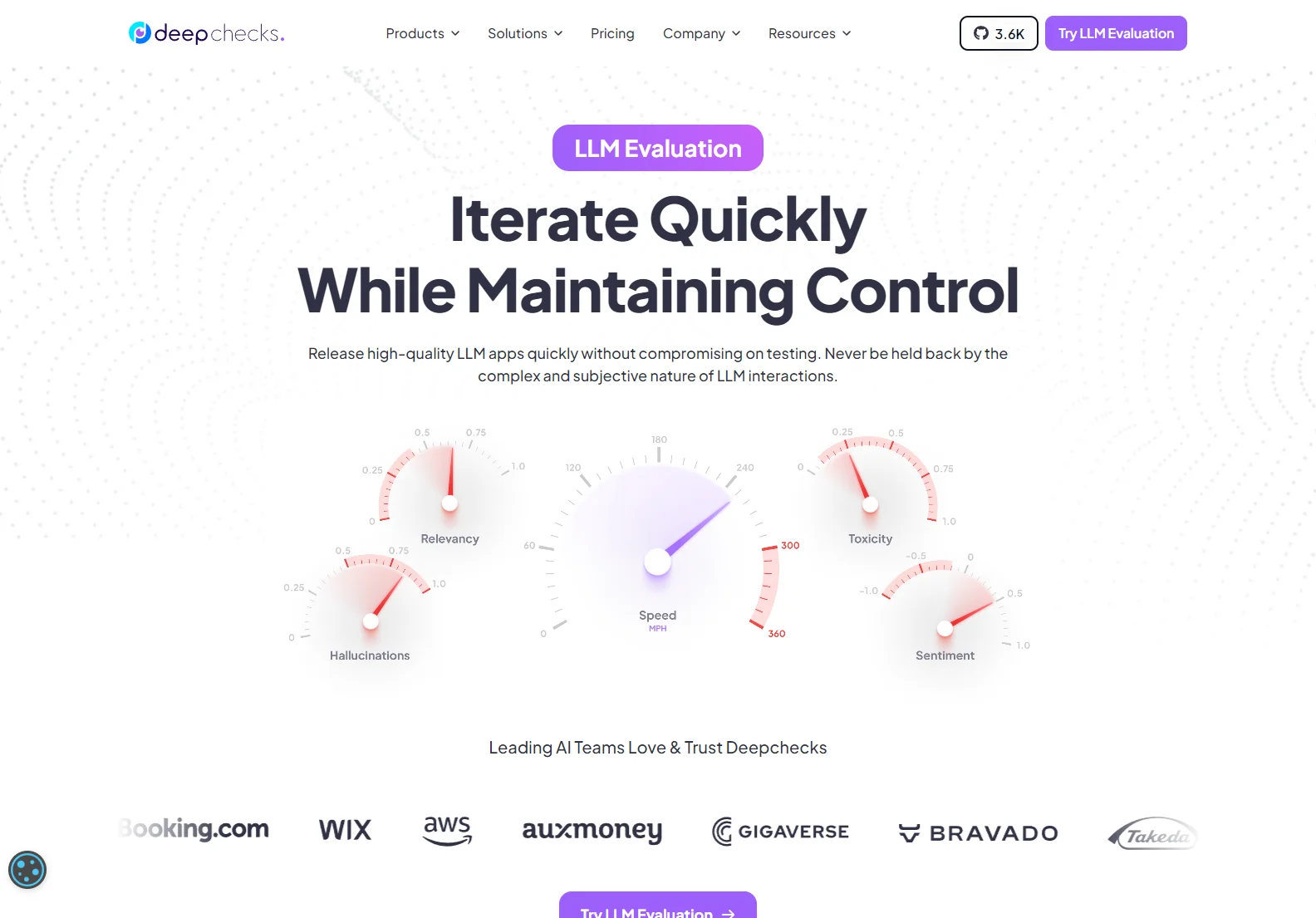

Deepchecks: Revolutionizing LLM Evaluation for High-Quality AI Apps

Deepchecks is a platform designed to streamline the evaluation of applications built on Large Language Models (LLMs). It addresses the inherent difficulty of evaluating subjective AI outputs, helping teams verify that their LLM-based apps meet their quality, compliance, and user-experience requirements. This guide covers Deepchecks' key features and benefits.

The Challenge of LLM Evaluation

Evaluating LLMs is notoriously difficult. Traditional software can usually be tested against an exact expected result, but LLM outputs are often subjective and nuanced, so there is rarely a single correct answer to compare against. A seemingly small change in wording can drastically alter the meaning or impact of a response. Manually reviewing and annotating enough examples for a robust evaluation is time-consuming, expensive, and prone to human error.

Deepchecks tackles this challenge by automating much of the evaluation process, allowing developers to quickly identify and address potential issues such as:

- Hallucinations: Instances where the LLM generates factually incorrect or nonsensical information.

- Bias: Unfair or discriminatory outputs reflecting biases present in the training data.

- Policy Deviation: Responses that violate predefined guidelines or company policies.

- Harmful Content: Outputs that are offensive, abusive, or otherwise inappropriate.

- Inconsistent Quality: Fluctuations in the quality of generated text across different inputs.

Deepchecks' Solution: Automated LLM Evaluation

Deepchecks automates much of the LLM evaluation workflow, significantly reducing the time and resources required for thorough testing. Key features include:

- Automated Golden Set Generation: Deepchecks helps create and manage a comprehensive Golden Set (the GenAI counterpart of a test set), minimizing the need for extensive manual annotation. It supplies "estimated annotations" automatically, and reviewers can override them where needed.

- Systematic Issue Detection: The platform systematically identifies and flags potential problems across various dimensions of LLM performance.

- Open Core Product: Deepchecks follows an open-core model, building its platform on a widely used and tested open-source foundation.

- Integration with AWS SageMaker: Deepchecks is now natively available within AWS SageMaker, simplifying integration into existing workflows.
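The Golden Set workflow described above, where automatically estimated annotations stand unless a reviewer overrides them, can be illustrated in plain Python. This is a minimal sketch under stated assumptions, not Deepchecks' API: the `GoldenSetSample` class, the `override` and `pass_rate` helpers, and the "good"/"bad" labels are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class GoldenSetSample:
    """Hypothetical Golden Set entry: an input/output pair plus its annotations."""
    user_input: str
    output: str
    estimated_annotation: str               # label assigned automatically (assumed "good"/"bad")
    human_annotation: Optional[str] = None  # set only when a reviewer overrides the estimate

    @property
    def final_annotation(self) -> str:
        # A human override, when present, takes precedence over the estimate.
        if self.human_annotation is not None:
            return self.human_annotation
        return self.estimated_annotation


def override(sample: GoldenSetSample, label: str) -> None:
    """Record a reviewer's label without discarding the original estimate."""
    sample.human_annotation = label


def pass_rate(samples: List[GoldenSetSample]) -> float:
    """Fraction of samples whose final annotation is 'good' (0.0 for an empty set)."""
    if not samples:
        return 0.0
    good = sum(1 for s in samples if s.final_annotation == "good")
    return good / len(samples)


# Usage: the estimate wrongly accepts a hallucinated answer, and a reviewer corrects it.
golden = [
    GoldenSetSample("What is 2 + 2?", "4", "good"),
    GoldenSetSample("What is the capital of Australia?", "Sydney", "good"),
    GoldenSetSample("Translate 'hello' to Spanish.", "Hola", "good"),
]
override(golden[1], "bad")  # the capital is Canberra, so this output is a hallucination
print(f"{pass_rate(golden):.2f}")  # prints 0.67
```

Keeping the estimated and human labels in separate fields, rather than overwriting one with the other, makes it easy to measure how often reviewers disagree with the automatic annotations.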

Benefits of Using Deepchecks

By using Deepchecks, developers can:

- Iterate Faster: Quickly identify and fix issues, accelerating the development cycle.

- Maintain Control: Ensure consistent quality and compliance throughout the development process.

- Reduce Costs: Minimize the time and resources spent on manual evaluation.

- Improve User Experience: Deliver higher-quality, more reliable LLM-based applications.

Deepchecks for Various LLM Applications

Deepchecks is versatile and applies to a wide range of LLM applications, including chatbots, content generation tools, and more. This adaptability makes it a valuable asset for teams building many kinds of LLM-powered products.

Conclusion

Deepchecks is a game-changer for LLM evaluation. Its automated approach, coupled with its robust features and open-source foundation, empowers developers to build and deploy high-quality LLM applications with confidence and efficiency. By addressing the inherent challenges of LLM evaluation, Deepchecks helps pave the way for a future where AI-powered applications are both innovative and reliable.