GET3D: A Generative Model for High-Quality 3D Textured Shapes

GET3D is a generative model from NVIDIA's Toronto AI Lab that produces high-quality, textured 3D meshes and is trained using only 2D images. Earlier 3D generative models often lack geometric detail, are limited in the mesh topology they can produce, or do not support textures. GET3D generates diverse shapes with arbitrary topology, detailed geometry, and high-fidelity textures.

Key Features and Capabilities

- High-Quality Geometry and Textures: GET3D generates 3D models with rich geometric detail and realistic textures, two areas where many earlier 3D generative models fall short.

- Complex Topology Support: The model handles shapes with arbitrary topology, allowing for the creation of intricate and detailed 3D objects.

- Direct Mesh Generation: GET3D outputs explicit textured meshes, so no conversion from an implicit or neural representation is needed before the assets are used in standard 3D rendering engines.

- Disentanglement of Geometry and Texture: Geometry and texture are driven by separate latent codes, so each can be manipulated independently of the other.

- Latent Code Interpolation: Smooth transitions between different shapes are possible through interpolation in the latent space.

- Text-Guided Generation (with fine-tuning): The core model is trained without text supervision, but fine-tuning the generator with a CLIP-based loss on rendered images enables text-guided generation, so users can describe the shape they want in words.

- Unsupervised Material Generation (with DIBR++): When combined with DIBR++, GET3D can generate materials and realistic view-dependent lighting effects without explicit supervision.
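
The disentanglement and interpolation features above can be illustrated with a small sketch. It operates only on latent codes and does not call GET3D itself; the latent size of 512, the helper names `lerp` and `interpolation_path`, and the choice of plain linear interpolation are all assumptions made for illustration. In a real setup, each pair of codes would be passed to the trained generator to produce one textured mesh.

```python
import numpy as np

LATENT_DIM = 512  # assumed latent size, chosen for illustration only


def lerp(z_start, z_end, t):
    """Linearly blend two latent codes; t=0 gives z_start, t=1 gives z_end."""
    return (1.0 - t) * z_start + t * z_end


def interpolation_path(geo_a, geo_b, tex_a, tex_b, steps=5, vary="both"):
    """Return a list of (geometry_code, texture_code) pairs along a path.

    vary="geometry" keeps the texture code fixed at tex_a,
    vary="texture" keeps the geometry code fixed at geo_a,
    vary="both" interpolates the two codes together.
    """
    if vary not in ("geometry", "texture", "both"):
        raise ValueError(f"unknown vary mode: {vary}")
    path = []
    for t in np.linspace(0.0, 1.0, steps):
        geo = lerp(geo_a, geo_b, t) if vary in ("geometry", "both") else geo_a
        tex = lerp(tex_a, tex_b, t) if vary in ("texture", "both") else tex_a
        path.append((geo, tex))
    return path


# Hypothetical codes for two shapes, A and B, sampled from a standard normal.
rng = np.random.default_rng(0)
geo_a, geo_b, tex_a, tex_b = rng.standard_normal((4, LATENT_DIM))

# Morph the shape from A to B while the texture code stays at A.
path = interpolation_path(geo_a, geo_b, tex_a, tex_b, steps=5, vary="geometry")
```

Because the two codes are kept separate, switching `vary` to `"texture"` would instead repaint a fixed shape, which is the kind of independent control the disentanglement bullet describes.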

How GET3D Works

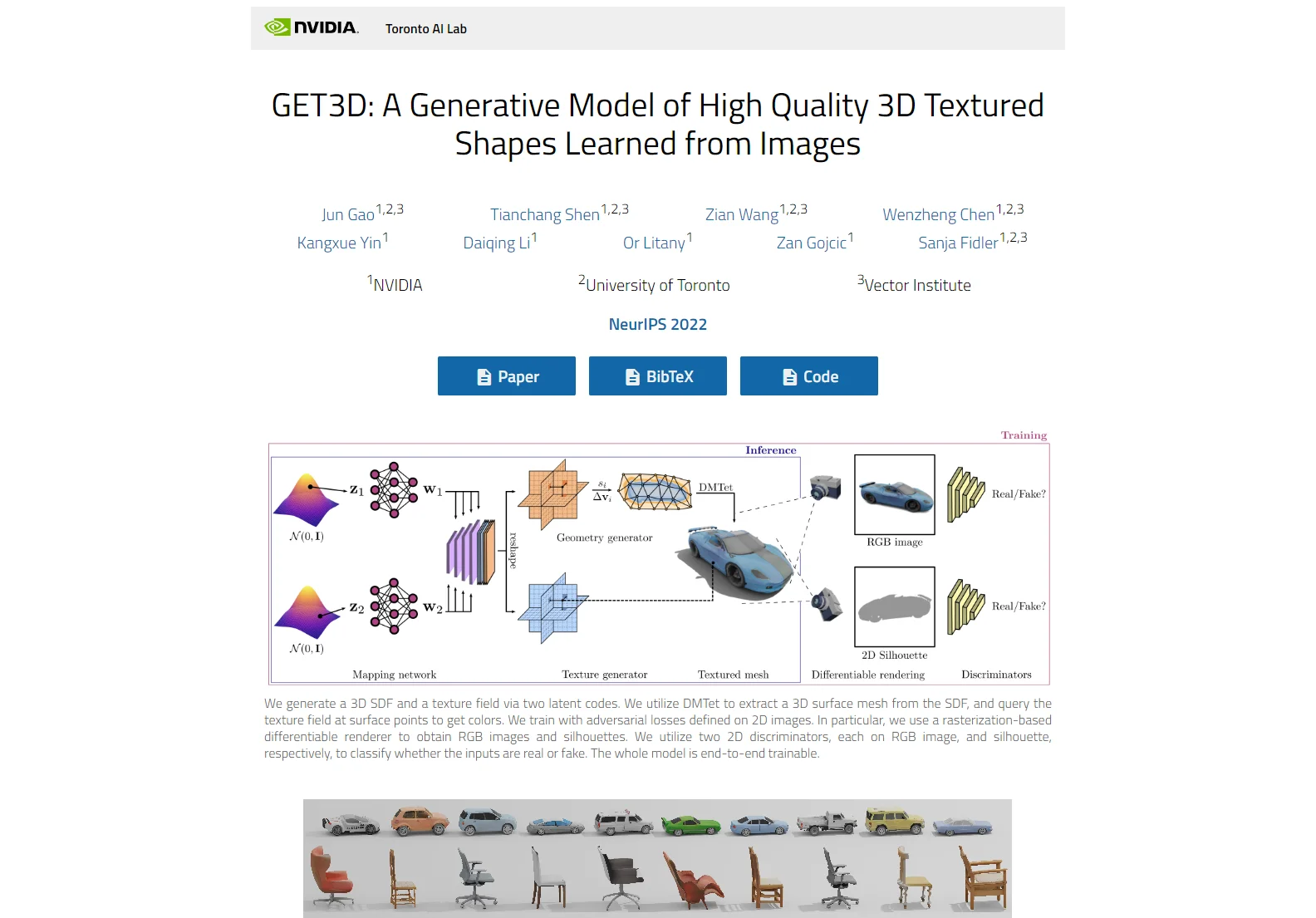

GET3D combines differentiable surface modeling, differentiable rendering, and 2D Generative Adversarial Networks (GANs). From two latent codes, one for geometry and one for texture, it generates a 3D signed distance function (SDF) and a texture field. A differentiable surface extraction step turns the SDF into a triangle mesh, and the texture field is queried at the surface to color it. A differentiable renderer then produces RGB images and silhouettes of the textured mesh, and 2D discriminators compare these renders against real images to supply the adversarial losses. Because every step is differentiable, the system can be trained end to end on 2D image datasets.
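
The data flow in this section can be traced with a deliberately simplified NumPy sketch. It is a toy stand-in, not the GET3D implementation: the "generators" are closed-form functions rather than neural networks, mesh extraction is skipped and the silhouette is rendered straight from the SDF grid, and the discriminator outputs are passed in as plain numbers. All function names, the grid resolution, and the non-saturating form of the loss are assumptions made for illustration.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def toy_sdf(z_geo, resolution=32):
    """Toy geometry generator: SDF of a sphere whose radius depends on z_geo.

    Values are negative inside the surface and positive outside.
    """
    radius = 0.3 + 0.4 * sigmoid(z_geo.mean())  # radius lies in (0.3, 0.7)
    axis = np.linspace(-1.0, 1.0, resolution)
    x, y, z = np.meshgrid(axis, axis, axis, indexing="ij")
    return np.sqrt(x**2 + y**2 + z**2) - radius


def toy_color(z_tex):
    """Toy texture generator: one RGB color derived from the texture code."""
    return sigmoid(z_tex[:3])


def render(sdf, color, sharpness=50.0):
    """Orthographic render along the z axis.

    A pixel is covered when its ray passes inside the surface, i.e. when the
    minimum SDF along the ray is negative. The sigmoid gives a soft edge.
    """
    min_along_ray = sdf.min(axis=2)
    silhouette = sigmoid(-sharpness * min_along_ray)
    rgb = silhouette[..., None] * color
    return rgb, silhouette


def generator_loss(logits_rgb, logits_mask):
    """Non-saturating GAN loss for the generator, summed over an RGB
    discriminator and a silhouette discriminator (logits supplied by caller)."""
    return (np.mean(np.logaddexp(0.0, -logits_rgb))
            + np.mean(np.logaddexp(0.0, -logits_mask)))


rng = np.random.default_rng(0)
z_geo, z_tex = rng.standard_normal((2, 64))
rgb, mask = render(toy_sdf(z_geo), toy_color(z_tex))
```

The point of the sketch is the ordering of the stages: latent codes feed a shape and a color function, rendering reduces them to a 2D image and a silhouette, and the loss depends only on those 2D outputs. That last property is what lets a model of this kind learn 3D structure from image collections alone.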

Applications

GET3D has significant potential across various industries, including:

- Game Development: Creating realistic and diverse 3D assets for video games.

- Virtual Reality (VR) and Augmented Reality (AR): Generating high-quality 3D content for immersive experiences.

- Film and Animation: Producing realistic characters, environments, and objects for visual effects.

- Architectural Visualization: Creating detailed 3D models of buildings and structures.

- Product Design: Designing and visualizing new products in 3D.

Comparisons to Other Methods

Compared with earlier 3D generative models, GET3D offers more geometric detail, textured output, and support for complex topologies. Unlike methods that rely on neural rendering at synthesis time, it generates explicit meshes, so its output can be used directly in standard 3D software pipelines.

Conclusion

GET3D is a significant advance in 3D generative modeling. Its ability to generate high-quality, textured 3D meshes with complex topologies, learned from 2D images alone, makes it a valuable tool for researchers and practitioners working on 3D content creation.