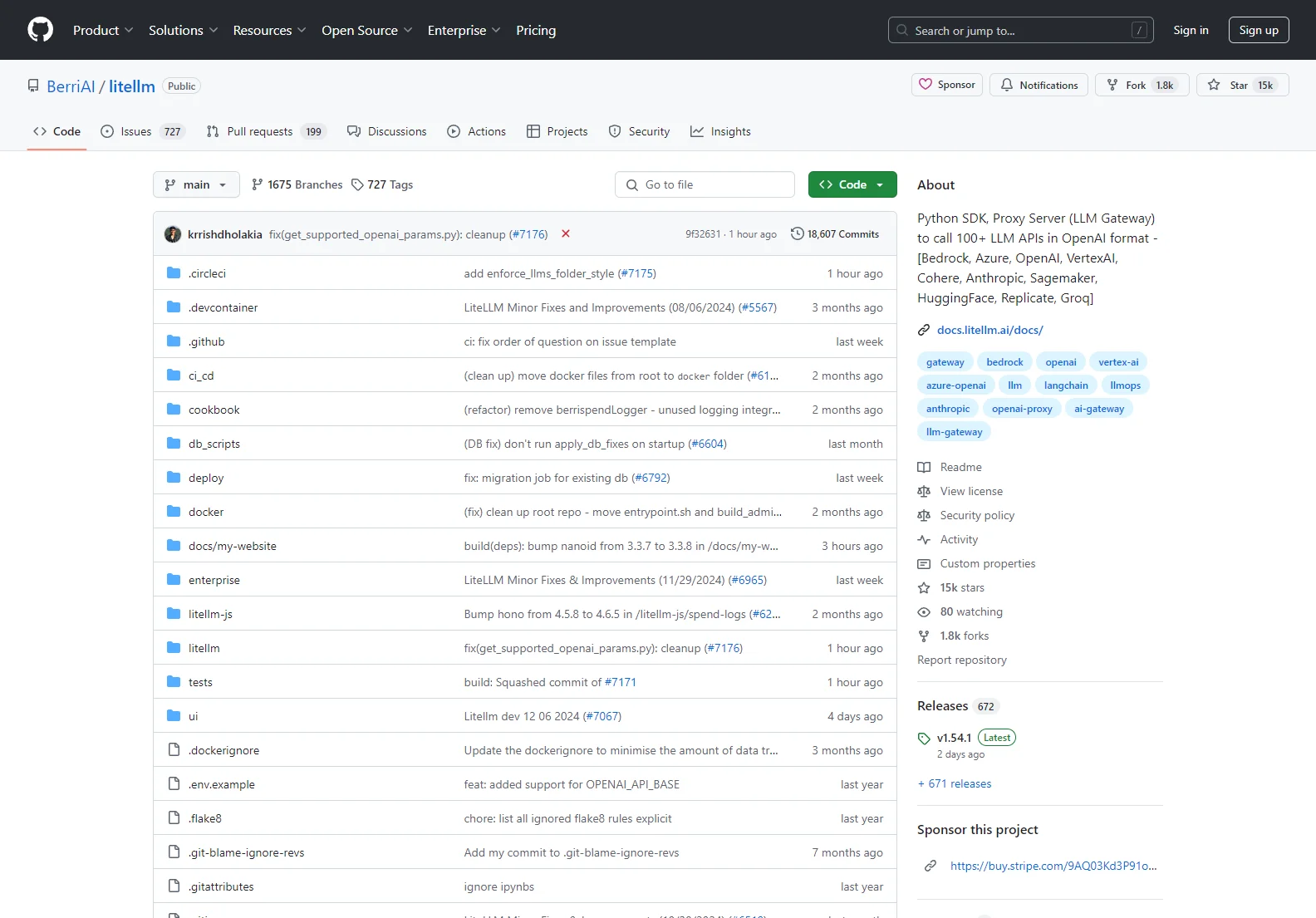

LiteLLM: A Universal Python SDK and Proxy for 100+ Large Language Models

LiteLLM is a Python SDK and proxy server that simplifies interaction with more than 100 Large Language Model (LLM) APIs. It presents a unified interface, so developers can switch between providers such as OpenAI, Azure, Google Vertex AI, Cohere, and Anthropic while keeping a consistent OpenAI-compatible request and response format. This reduces the complexity of integrating multiple LLMs into an application.

Key Features

- Unified API: Interact with diverse LLMs using a single, consistent API based on the familiar OpenAI format. This simplifies development and reduces the learning curve.

- Extensive Provider Support: Access a wide range of LLMs from providers such as OpenAI, Azure, Google Vertex AI, Bedrock, Hugging Face, Cohere, Anthropic, SageMaker, and more. The provider list continues to grow as new models are released.

- Proxy Server (LLM Gateway): The included proxy server acts as a central gateway, managing requests, handling retries, and providing features like rate limiting and cost tracking. This enhances reliability and control over your LLM interactions.

- Streaming Support: Stream LLM responses as they are generated, enabling more interactive and dynamic applications. This feature is supported across all integrated providers.

- Asynchronous Operations: Perform asynchronous calls for improved efficiency and responsiveness in your applications.

- Logging and Observability: Integrate with various logging and observability tools like Lunary, Langfuse, and more, providing valuable insights into your LLM usage and performance.

- Cost Management: Set budgets and rate limits to control spending and prevent unexpected costs.

- OpenAI Compatibility: LiteLLM is designed to be highly compatible with the OpenAI API, making it easy to transition existing OpenAI-based applications.

- Easy Installation: Simple installation via pip:

pip install litellm
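As a rough illustration of the streaming feature listed above, the sketch below collects text from a streamed completion. It assumes that passing `stream=True` to `completion` yields OpenAI-style chunks whose text sits in `choices[0].delta.content`. The names `stream_text`, `fake_stream`, and the `complete` parameter are hypothetical, introduced here only so the helper can be tried without an API key or network access.

```python
from types import SimpleNamespace


def stream_text(model, prompt, complete=None):
    """Yield text fragments from a streamed chat completion.

    `complete` is a hypothetical injection point: by default it is
    litellm.completion, but any callable with the same shape works.
    """
    if complete is None:
        # Imported lazily so the helper can be exercised without litellm installed.
        from litellm import completion as complete

    messages = [{"role": "user", "content": prompt}]
    for chunk in complete(model=model, messages=messages, stream=True):
        # Assumption: chunks follow the OpenAI streaming shape. The content of
        # a chunk may be None (for example on the final chunk), so skip those.
        text = chunk.choices[0].delta.content
        if text:
            yield text


def fake_stream(model, messages, stream):
    """Stand-in for litellm.completion that emits three canned chunks."""
    for piece in ["Hel", "lo", None]:
        delta = SimpleNamespace(content=piece)
        yield SimpleNamespace(choices=[SimpleNamespace(delta=delta)])


if __name__ == "__main__":
    # Swap complete=fake_stream for the default to call a real provider.
    for fragment in stream_text("gpt-3.5-turbo", "Say hello", complete=fake_stream):
        print(fragment, end="", flush=True)
    print()
```

Dropping the `complete=fake_stream` argument makes the helper call LiteLLM itself, provided the relevant API key is set in the environment.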

Use Cases

LiteLLM's versatility makes it suitable for a wide range of applications, including:

- Chatbots: Build sophisticated chatbots that leverage the strengths of different LLMs.

- Content Generation: Create various types of content, such as articles, summaries, and creative text formats.

- Code Generation and Completion: Assist developers with code generation and completion tasks.

- Translation: Perform accurate and efficient translations between multiple languages.

- Custom AI Applications: Integrate LLMs into any application requiring natural language processing capabilities.

Comparison with Other Solutions

While other libraries offer LLM interaction, LiteLLM distinguishes itself through its comprehensive support for numerous providers, its unified API, and its built-in proxy server for enhanced management and control. This simplifies development and reduces the overhead associated with managing multiple LLM APIs.
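To make the proxy server mentioned above more concrete, here is a minimal sketch of a client talking to it over plain HTTP. It assumes a LiteLLM proxy is already running locally and exposes an OpenAI-compatible `/chat/completions` route. The address, port, and key below are placeholders, and `build_chat_request` and `send` are hypothetical helper names rather than part of LiteLLM.

```python
import json
import urllib.request

PROXY_URL = "http://localhost:4000"  # assumed local proxy address; adjust to your setup
PROXY_KEY = "sk-1234"  # placeholder key, not a real credential


def build_chat_request(model, prompt, base_url=PROXY_URL, api_key=PROXY_KEY):
    """Build (but do not send) an OpenAI-style chat request for the proxy."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request) as resp:
        body = json.load(resp)
    # Assumption: the proxy answers in the OpenAI chat completion format.
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request("gpt-3.5-turbo", "Hello, how are you?")
    print(req.full_url)
    # print(send(req))  # uncomment once a proxy is running at PROXY_URL
```

Because the request follows the OpenAI format, an existing OpenAI client can in principle be pointed at the same base URL instead of using raw HTTP.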

Getting Started

- Installation:

pip install litellm

- API Key Setup: Set environment variables for the API keys of each provider you use (e.g., OPENAI_API_KEY, AZURE_API_KEY, etc.).

- Making a Request:

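import os

from litellm import completion

# Set the provider API key (replace the placeholder with a real key).
os.environ["OPENAI_API_KEY"] = "your-openai-key"

messages = [{"content": "Hello, how are you?", "role": "user"}]

# The call uses the OpenAI chat format regardless of the provider behind the model.
response = completion(model="gpt-3.5-turbo", messages=messages)
print(response)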
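Building on the request above, the following sketch sends one prompt to several models through the same call and keeps only the reply text, which is the practical payoff of the unified interface. It assumes the response follows the OpenAI shape (`response.choices[0].message.content`). The second model name is only an illustrative placeholder in `provider/model` style, and `ask_each`, `fake_completion`, and the `complete` parameter are hypothetical names used so the example runs without credentials.

```python
from types import SimpleNamespace


def ask_each(models, prompt, complete=None):
    """Send the same prompt to every model and return {model: reply text}."""
    if complete is None:
        # Imported lazily so the sketch can be tried without litellm installed.
        from litellm import completion as complete

    messages = [{"role": "user", "content": prompt}]
    replies = {}
    for model in models:
        # Only the model string changes between providers.
        response = complete(model=model, messages=messages)
        replies[model] = response.choices[0].message.content
    return replies


def fake_completion(model, messages):
    """Stand-in for litellm.completion that echoes the last user message."""
    text = f"[{model}] echo: {messages[-1]['content']}"
    message = SimpleNamespace(role="assistant", content=text)
    return SimpleNamespace(choices=[SimpleNamespace(message=message)])


if __name__ == "__main__":
    models = ["gpt-3.5-turbo", "anthropic/claude-3-haiku-20240307"]  # placeholders
    for name, reply in ask_each(models, "Hello", complete=fake_completion).items():
        print(f"{name}: {reply}")
```

With real keys set for each provider, omitting `complete=fake_completion` routes the same loop through LiteLLM.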

Conclusion

LiteLLM is a valuable tool for developers looking to simplify their interactions with a wide range of LLMs. Its unified API, proxy server, and extensive features make it a powerful and efficient solution for building AI-powered applications.