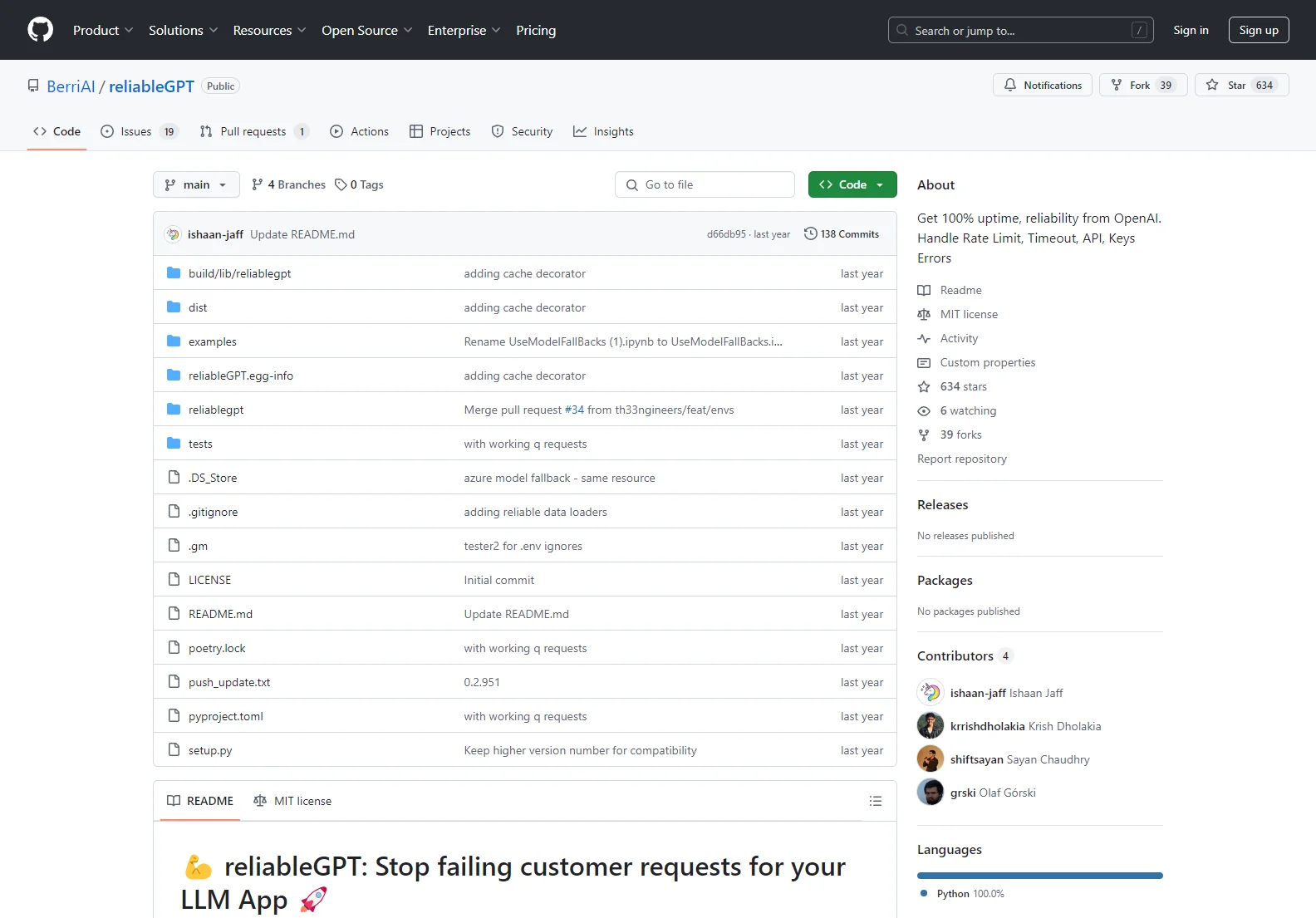

reliableGPT: Revolutionizing LLM App Reliability

reliableGPT is a powerful Python library designed to significantly enhance the reliability of your Large Language Model (LLM) applications. It tackles common issues like rate limits, timeouts, API key errors, and context window limitations, ensuring consistent performance and minimizing dropped requests. This is achieved through a multi-pronged approach involving model fallback strategies, caching, and intelligent error handling.

Key Features

- Model Fallback: If a request fails, reliableGPT automatically retries using alternative models (e.g., switching from gpt-3.5-turbo to gpt-4 or text-davinci-003). You can customize the fallback order to prioritize specific models based on your needs and costs.

- Context Window Error Handling: Automatically retries requests that fail due to context window limitations by using models with larger context windows.

- API Key Rotation: Handles invalid API key errors by seamlessly rotating through a list of backup keys, preventing interruptions due to key rotation or accidental invalidations. This ensures continuous operation even if one key becomes unusable.

- Caching: Implements a caching mechanism (using Supabase for persistence) to store responses. This acts as a fallback when retries fail, so users can still receive a response under heavy load or temporary API disruptions, provided a sufficiently similar request has already been cached. Cached responses are retrieved semantically, using cosine similarity between requests.

- Azure OpenAI Integration: Seamlessly integrates with Azure OpenAI, allowing you to define fallback strategies between Azure and OpenAI endpoints. This provides redundancy and resilience in case one platform experiences issues.

- Rate Limit Management: Handles rate limits by intelligently pacing requests to stay within your API usage limits.

- Comprehensive Error Handling: Provides detailed error logging and reporting, allowing you to monitor the health and performance of your LLM application.

- User-Friendly Interface: The library is designed with a simple and intuitive API, making it easy to integrate into existing projects.
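The model fallback and API key rotation ideas above can be sketched in a few lines of plain Python. This is a minimal illustration, not reliableGPT's implementation: `call_with_fallback`, `InvalidKeyError`, and the `call(model, key, prompt)` signature are hypothetical names chosen for the example.

```python
from typing import Callable, Optional, Sequence


class InvalidKeyError(Exception):
    """Hypothetical stand-in for a provider's invalid-API-key error."""


def call_with_fallback(
    call: Callable[[str, str, str], str],
    prompt: str,
    models: Sequence[str],
    api_keys: Sequence[str],
) -> str:
    """Try each model in order; for each model, rotate keys on InvalidKeyError."""
    last_error: Optional[Exception] = None
    for model in models:
        for key in api_keys:
            try:
                return call(model, key, prompt)
            except InvalidKeyError as err:
                # Bad key: keep the same model and try the next backup key.
                last_error = err
            except Exception as err:
                # Any other failure (timeout, rate limit, ...): give up on
                # this model and fall back to the next one in the list.
                last_error = err
                break
    raise RuntimeError("all models and keys failed") from last_error
```

The order of `models` is the fallback order, so cheaper or faster models can be listed first and larger-context models last.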

Use Cases

- Production-Ready LLM Apps: Ensure your application remains available and responsive even during peak demand or API outages.

- Multi-Model Deployments: Easily manage and switch between multiple LLM providers and models.

- High-Traffic Applications: Maintain consistent performance and minimize dropped requests in high-traffic scenarios.

- Improved User Experience: Provide a seamless and reliable experience for your users, even in the face of unexpected errors.

Getting Started

Installation is straightforward using pip:

pip install reliableGPT

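Integration is typically a single line of code once the imports are in place. The example uses the pre-1.0 openai SDK, which exposes openai.ChatCompletion:

import openai

from reliablegpt import reliableGPT

openai.ChatCompletion.create = reliableGPT(openai.ChatCompletion.create, user_email='your_email@example.com')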

Remember to replace 'your_email@example.com' with your actual email address. This allows reliableGPT to send you alerts about potential issues.
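The one-line integration works because the original function is replaced by a wrapper that is called the same way. The sketch below shows that general pattern only; `with_reliability`, `on_error`, and `max_attempts` are hypothetical names for illustration and are not part of reliableGPT's API.

```python
import functools
from typing import Any, Callable


def with_reliability(
    fn: Callable[..., Any],
    on_error: Callable[[Exception], None],
    max_attempts: int = 3,
) -> Callable[..., Any]:
    """Return a drop-in replacement for fn that retries and reports errors."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")

    @functools.wraps(fn)  # keep the wrapped function's name and docstring
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        last_error: Exception = RuntimeError("no attempt was made")
        for _ in range(max_attempts):
            try:
                return fn(*args, **kwargs)
            except Exception as err:
                last_error = err
                on_error(err)  # e.g. log the failure or send an alert
        raise last_error

    return wrapper
```

Because the wrapper accepts the same arguments as `fn`, existing call sites do not need to change after the assignment.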

Advanced Usage

reliableGPT offers several advanced features, including custom fallback strategies, backup API keys, and caching configuration. Refer to the official documentation for detailed instructions and examples.
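To make the caching idea concrete, here is a minimal in-memory sketch of cosine-similarity lookup over cached responses. It is an assumption-laden illustration rather than the library's code: `SemanticCache` and its `threshold` parameter are hypothetical, embeddings are passed in as plain lists of floats, and a Python list stands in for the Supabase-backed store.

```python
import math
from typing import List, Optional, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class SemanticCache:
    """Hypothetical cache keyed by request embeddings instead of exact text."""

    def __init__(self, threshold: float = 0.9) -> None:
        self.threshold = threshold
        self._entries: List[Tuple[List[float], str]] = []

    def put(self, embedding: Sequence[float], response: str) -> None:
        self._entries.append((list(embedding), response))

    def get(self, embedding: Sequence[float]) -> Optional[str]:
        """Return the most similar cached response, or None on a cache miss."""
        best_score, best_response = 0.0, None
        for stored, response in self._entries:
            score = cosine_similarity(embedding, stored)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None
```

The threshold controls the trade-off: a higher value returns fewer but more relevant cached responses, and a miss returns `None` so the caller can decide how to fail.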

Comparison with Other Libraries

While other libraries offer some aspects of error handling, reliableGPT distinguishes itself through its comprehensive approach, combining model fallback, caching, API key management, and detailed error reporting into a single, easy-to-use package. This combination makes it a strong fit for building robust and reliable LLM applications without assembling those pieces separately.

Conclusion

reliableGPT is an essential tool for developers building production-ready LLM applications. Its focus on reliability, error handling, and ease of use makes it a valuable asset for ensuring consistent performance and minimizing downtime.